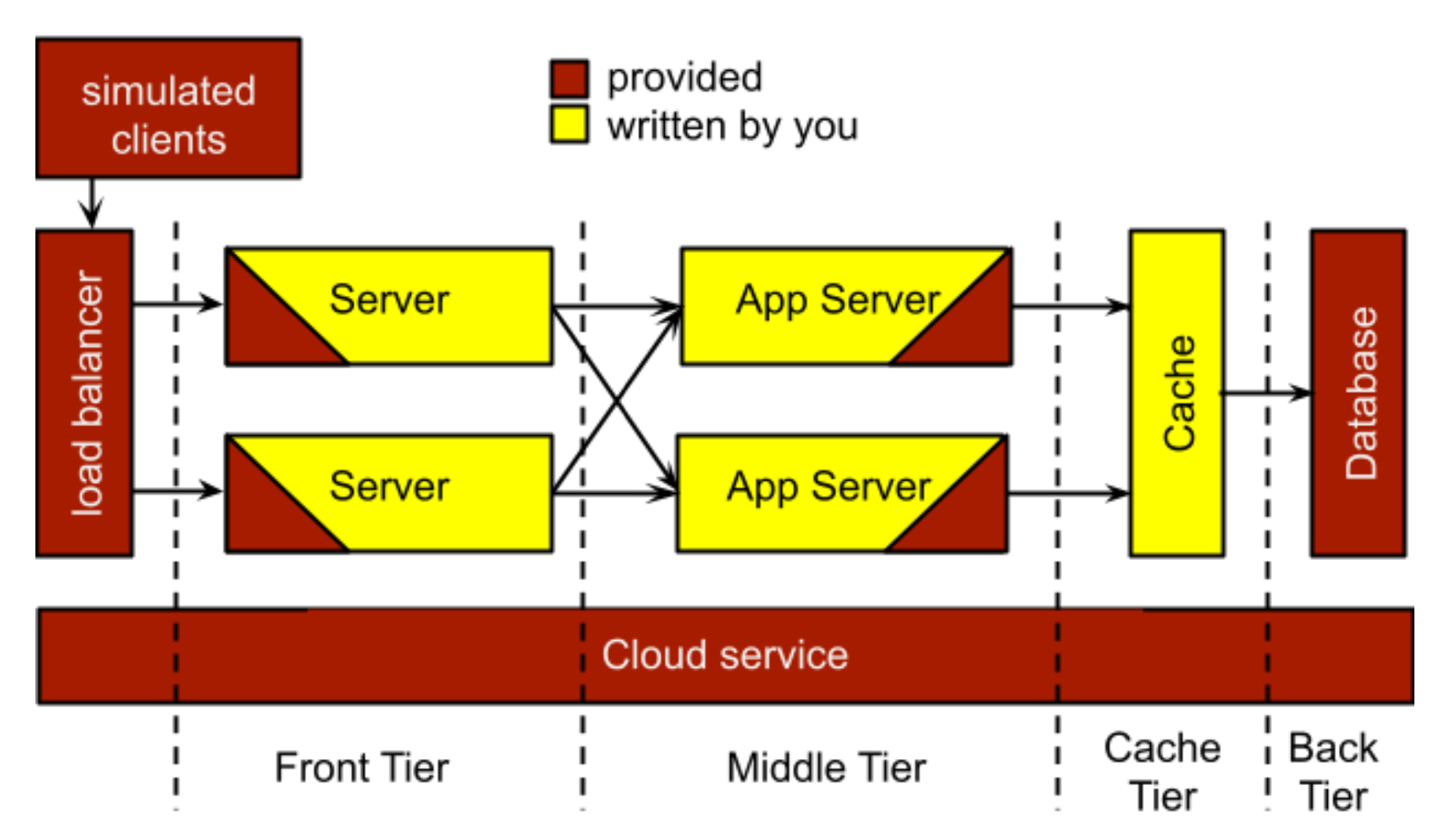

The Scalable Web Service project aims to implement a scalable web service infrastructure and tune it for the best scale-out and scale-back performance. A typical scalable web service is a three-tier (sometimes four-tier) system, structured as follows:

The front-end tier accepts requests from the load balancer, produces the static content for each request, and passes the request to the back-end tier. The back-end tier processes the request by adding dynamic content and fetching the required information from the cache tier. The cache tier speeds up interaction with the DB tier.

Because each tier can be sized independently, this structure makes it flexible to scale out and scale back.

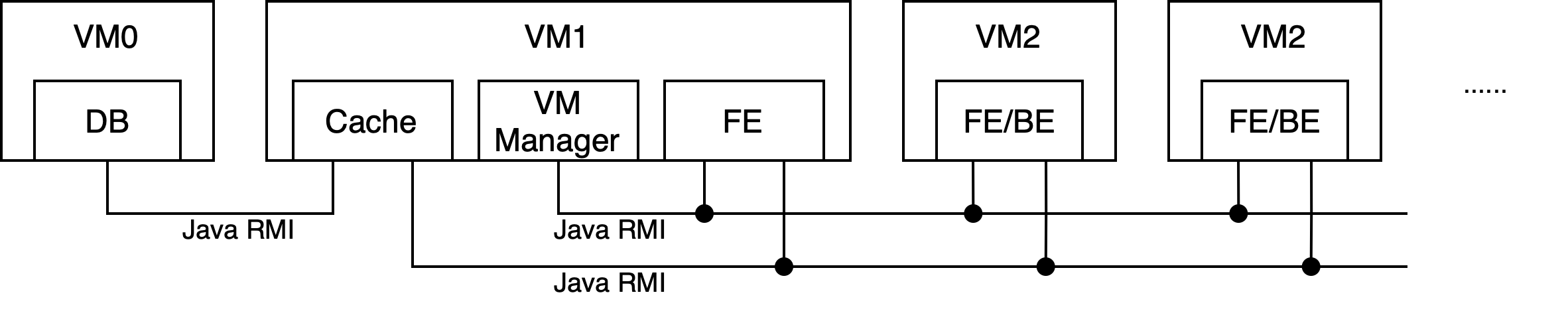

The system structure is shown in the following figure.

Besides a server instance, VM1 also brings up a VM Manager instance and a Cache instance. They provide coordination and caching services to all server instances in the cluster: every server instance is coordinated by the VM Manager, and every back-end server processes requests through the cache.

A server either receives requests from the balancer (front-end server) or processes requests (back-end server). Once brought up by the cloud, a new server registers itself with the VM Manager on VM1 using RMI. The VM Manager assigns it a role, either front-end or back-end. The server then follows its built-in state machine to switch between roles according to commands from the VM Manager. When serving as a front-end server, it registers itself with the front-end balancer and keeps passing new requests to the middle balancer. When serving as a back-end server, it registers itself with the middle balancer and keeps processing new requests.
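The registration handshake described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the `VmManager` interface, its `register` method, and the `targetFrontEnds` policy (fill a fixed number of front-end slots first, then assign back-end) are all assumptions. The manager here runs in-process; a real deployment would presumably export it over RMI from VM1.

```java
import java.rmi.Remote;
import java.rmi.RemoteException;
import java.util.LinkedHashMap;
import java.util.Map;

// The two roles named in the text.
enum Role { FRONT_END, BACK_END }

// Hypothetical remote interface; the method name and signature are assumptions.
interface VmManager extends Remote {
    Role register(String serverId) throws RemoteException;
}

// In-process stand-in for the VM Manager. Exporting it over RMI
// (e.g. via UnicastRemoteObject and an RMI registry) is omitted here.
class SimpleVmManager implements VmManager {
    private final int targetFrontEnds; // assumed policy parameter
    private final Map<String, Role> roles = new LinkedHashMap<>();

    SimpleVmManager(int targetFrontEnds) {
        this.targetFrontEnds = targetFrontEnds;
    }

    @Override
    public synchronized Role register(String serverId) {
        // A server that registers twice keeps the role it already has.
        Role existing = roles.get(serverId);
        if (existing != null) {
            return existing;
        }
        // Assumed policy: fill the front-end slots first, then assign back-end.
        long frontEnds = roles.values().stream()
                .filter(r -> r == Role.FRONT_END)
                .count();
        Role role = frontEnds < targetFrontEnds ? Role.FRONT_END : Role.BACK_END;
        roles.put(serverId, role);
        return role;
    }
}
```

With `new SimpleVmManager(1)`, the first server to register becomes the front-end and every later server becomes a back-end.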

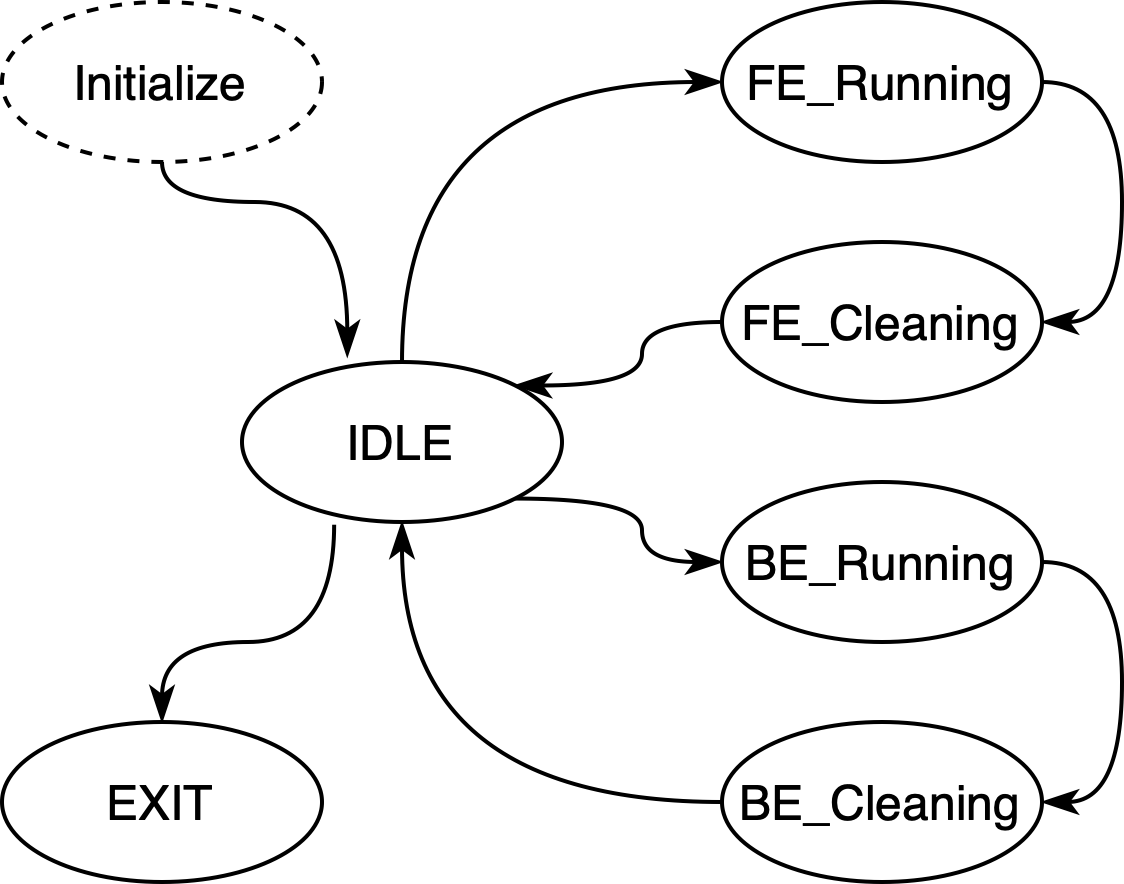

The state machine defines the server's behavior in every role. The state transition diagram is as follows:

By following the state transition diagram, the server can always ensure that tasks are finished and cleaned up. For example, before leaving the FE Running state and entering the Idle state, the server passes through an FE Cleaning state, which makes sure all requests remaining in the queue are processed.
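A state machine of this kind might be encoded as an enum with an explicit table of allowed transitions. The sketch below is an assumption-laden illustration: only Idle, FE Running, and FE Cleaning are named in the text, so the back-end states (`BE_RUNNING`, `BE_CLEANING`), the choice of `IDLE` as the initial state, and the exact transition set are guesses that mirror the front-end path.

```java
import java.util.EnumSet;
import java.util.Set;

enum ServerState {
    IDLE, FE_RUNNING, FE_CLEANING, BE_RUNNING, BE_CLEANING;

    // States reachable from this one. The back-end half is assumed
    // to mirror the front-end half described in the text.
    Set<ServerState> next() {
        switch (this) {
            case IDLE:        return EnumSet.of(FE_RUNNING, BE_RUNNING);
            case FE_RUNNING:  return EnumSet.of(FE_CLEANING);
            case FE_CLEANING: return EnumSet.of(IDLE);
            case BE_RUNNING:  return EnumSet.of(BE_CLEANING);
            case BE_CLEANING: return EnumSet.of(IDLE);
            default:          throw new AssertionError(this);
        }
    }
}

class ServerStateMachine {
    private ServerState state = ServerState.IDLE; // assumed initial state

    ServerState state() {
        return state;
    }

    // Moves to the target state, rejecting any transition not in the table.
    void transitionTo(ServerState target) {
        if (!state.next().contains(target)) {
            throw new IllegalStateException(state + " -> " + target);
        }
        state = target;
    }
}
```

Because `FE_RUNNING` can only move to `FE_CLEANING`, a role change cannot skip the cleanup step: an attempt to go from `FE_RUNNING` straight to `IDLE` throws an `IllegalStateException`.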

When a new request is added to the front-end queue, the front-end server records its arrival time. If a request is bound to time out before it can be served, it is dropped, which saves processing time for requests that can still complete in time.
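The drop rule could look roughly like the sketch below. The class names, the fixed `timeoutMillis` deadline, and the fixed `processingMillis` estimate are assumptions made for illustration; the text does not say how the server decides that a request will time out. Timestamps are passed in explicitly so the behavior is easy to check.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// A queued request together with its recorded arrival time.
class TimedRequest {
    final String id;
    final long arrivalMillis;

    TimedRequest(String id, long arrivalMillis) {
        this.id = id;
        this.arrivalMillis = arrivalMillis;
    }
}

class FrontEndQueue {
    private final Deque<TimedRequest> queue = new ArrayDeque<>();
    private final long timeoutMillis;    // assumed client-side deadline
    private final long processingMillis; // assumed estimate of remaining work
    private int dropped = 0;

    FrontEndQueue(long timeoutMillis, long processingMillis) {
        this.timeoutMillis = timeoutMillis;
        this.processingMillis = processingMillis;
    }

    // Records the arrival time when the request is enqueued.
    void add(String id, long nowMillis) {
        queue.addLast(new TimedRequest(id, nowMillis));
    }

    // Returns the oldest request that can still finish before its deadline,
    // dropping any older requests that cannot. Returns null if none remain.
    TimedRequest poll(long nowMillis) {
        TimedRequest r;
        while ((r = queue.pollFirst()) != null) {
            long waited = nowMillis - r.arrivalMillis;
            if (waited + processingMillis <= timeoutMillis) {
                return r;
            }
            dropped++;
        }
        return null;
    }

    int dropped() {
        return dropped;
    }
}
```

For example, with a 1000 ms timeout and a 300 ms processing estimate, a request that has already waited 800 ms is dropped, while one that has waited 200 ms is served.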

VM Manager is responsible for all server scheduling tasks, e.g., scaling out, scaling back, changing server role, and load estimation.
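As a rough illustration of how load estimation can drive scaling decisions, the sketch below sizes the cluster from an estimated request rate. Everything in it is hypothetical: the `ScalingPolicy` class, the `perServerCapacity` parameter, and the rule of dividing load by capacity are assumptions, not the VM Manager's actual algorithm.

```java
class ScalingPolicy {
    // Requests per second one server is assumed to handle.
    private final double perServerCapacity;

    ScalingPolicy(double perServerCapacity) {
        this.perServerCapacity = perServerCapacity;
    }

    // Number of servers needed for the estimated load, never fewer than one.
    int desiredServers(double estimatedRequestsPerSecond) {
        int needed = (int) Math.ceil(estimatedRequestsPerSecond / perServerCapacity);
        return Math.max(1, needed);
    }

    // Positive: scale out by that many servers.
    // Negative: scale back by that many servers.
    // Zero: leave the cluster as it is.
    int delta(int currentServers, double estimatedRequestsPerSecond) {
        return desiredServers(estimatedRequestsPerSecond) - currentServers;
    }
}
```

A real policy would likely add hysteresis or a cool-down period so that short load spikes do not cause servers to be started and stopped repeatedly.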